“AI is coming…”

Ever since ChatGPT launched in November 2022, “AI” has come to be used as shorthand for “large language models”, or LLMs. In fact, “LLM” is itself a shorthand, because many LLMs are actually “multi-modal” models whose purview spans some combination of text, imagery, audio, and perhaps even video. What these models all have in common is that they are: A) Generative, and B) Entirely digital — which means that their inputs and outputs are all things that can be channeled through a computer screen.[1]

The world has become obsessed with these things. It’s impossible to make it through a discussion on nearly any topic without someone commenting knowingly that “AI is coming, so…” Most often this statement is left hanging with implicit, unspecified, but assuredly momentous implications.

Just how earth-shattering LLMs will be remains to be seen. But there are a number of fields in which they’re already proving transformational — for example: translation, software development, customer service, and of course illustration. LLMs have also shown great promise in the realm of search & synthesis — scanning through vast quantities of digital documents in order to extract and integrate disparate bits of information.[2]

This kind of tool can already replace a substantial amount of dull human labor. For example, my firm Energy Impact Partners just invested in a company called Atomic Canyon, which has honed an LLM to automate compliance documentation for nuclear power plants. There are currently 54 operational nuclear power plants in the United States, and Atomic Canyon’s product, “Neutron”, could save thousands of hours of effort per year at every one of them.

I expect that products like this will rapidly permeate nearly all fields of “knowledge work” — beginning by automating the drudgery which doesn’t actually demand all that much knowledge.

But of course, there are much bigger theoretical uses for LLMs, provided that they continue to improve exponentially. And there are some very vocal true believers who contend that “artificial general intelligence” (or some meaningful approximation thereof) is just around the corner. In fact, the CEO of OpenAI, Sam Altman, recently stated: “We are now confident we know how to build AGI as we have traditionally understood it… We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word.”

Personally, I find these sorts of claims difficult to evaluate (if not completely unfalsifiable).

Will allocating ever more computing power to AI models eventually lead to AGI, or perhaps even incomprehensible levels of superintelligence? Are LLMs on track to rival the civilizational impact of “fire and electricity”, or to warrant the consumption of “99% of total generation”?

Um… Maybe?

It’s easy to get bogged down in theoretical debates about superintelligence. (For example: What is it, exactly?) I personally enjoy this kind of idle speculation — preferably with a glass of whiskey, some black lights, and “Dark Side” playing in the background — but I’m skeptical that this leads to better practical decisions.

Thankfully, there is a much more practical branch of AI which is rapidly gaining momentum, and which has a much less speculative path to solving some of humanity’s biggest problems. This field is beginning to be referred to as “Physical AI”, and I’m convinced it has already begun transforming the world for the better.

Humanity’s biggest problems

Humans are still a material species, living in a material world, which means that our biggest problems — from security, to climate change, to disease — are still mostly material in nature. These problems can only be addressed obliquely by hordes of digital AI agents confined to a computer screen.

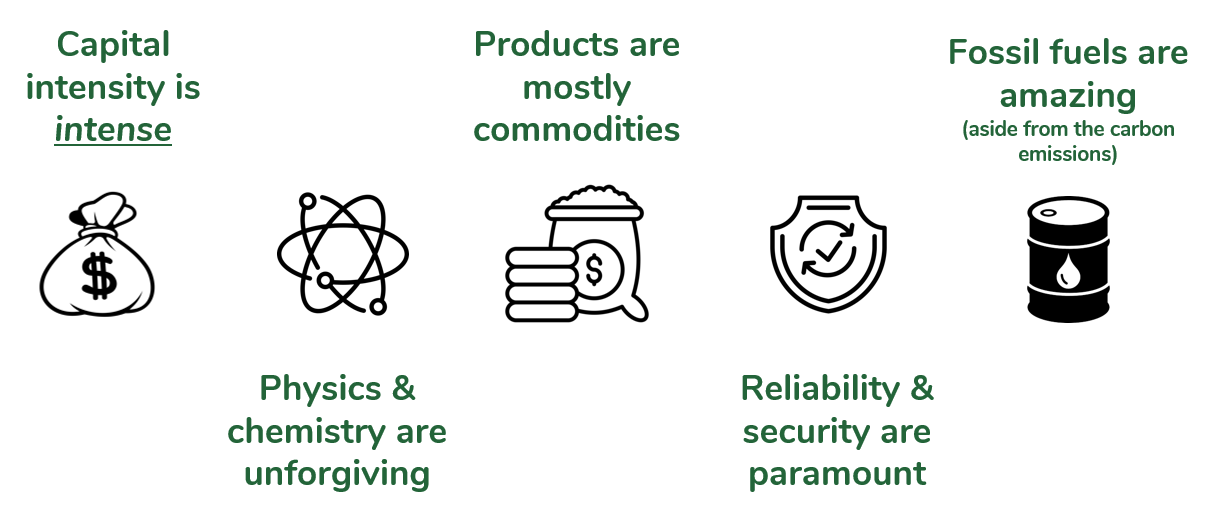

This is especially true in the case of climate and energy. In a recent post, I shared five reasons why the modern energy sector is such difficult terrain for anyone attempting to build a revolutionary technology company. All five stem from the fact that the industry is defined by big, physical systems, and the global flow of commodity materials.

There’s only so much that an LLM can do to overcome these barriers, no matter how many PhD-level questions it can answer.

In fact, this principle extends well beyond energy. Across the economy, the biggest bottlenecks to growth are generally not PhD-level bottlenecks. The digital revolution of the past 25 years has already made anyone working primarily with data & ideas — i.e. “knowledge workers” — tremendously more productive. As discussed above, LLMs are continuing this trend. During that same period, however, labor productivity in many “blue collar” fields has stagnated. In manufacturing and construction — which are the foundations of our ability to shape the physical world — labor productivity in the United States has been flat to declining for the past two decades.[3]

Compounding this problem is a related macro trend: demographic change. This trend has been an easy one to see coming, but has proven extremely difficult to counter. Demographics are now a challenge for essentially all technologically advanced, wealthy nations.

The last time the US embarked on a major national infrastructure spree, following World War II, the nation had an ideal demographic profile for such an endeavor. There was a bumper crop of children — courtesy of the famous “baby boom” — which provided a steady supply of prime working age adults for the next few decades. But today, our demographic profile is decidedly less favorable for a campaign of heavy lifting. Our pool of young people with strong backs is much shallower; and new restrictions on immigration are set to shrink the pool even further.

We’re already beginning to see these macro trends play out at a micro level in many individual sectors. Take solar power, for example. Back in 2022, the consulting firm McKinsey surveyed a group of project developers and utilities setting out to build large solar power portfolios, and found that this group’s top concern — even more so than inflation, or clogged interconnection queues — was the availability of labor.

All this is to say: I can only see one plausible option for addressing our society’s existential energy needs, and remaining globally competitive: We Americans (and Europeans, and Japanese…) need to take the next leap forward in physical labor productivity.

Towards “Physical AI”

Fortunately, many of the same advances in machine learning which have powered rapid progress in LLMs are also applicable to models whose I/O ports are directly plugged into physical sensors and actuators. This is the basic definition of “Physical AI”, and it’s not a new phenomenon. Since the dawn of the “deep learning” era, beginning around 2010, industrial operators have recognized that AI is much better at spotting patterns in machine data than humans. Hence, many of the first examples of Physical AI were software tools for identifying mechanical signatures in data flowing from industrial equipment, which could be used to facilitate more predictive approaches to operations & maintenance.

This trend has led equipment vendors to “sensor up” — especially in the case of equipment which is particularly expensive, or difficult to service, or both. For example, a large fleet of offshore wind turbines now generates about twelve times as much data, every year, as OpenAI used to train its landmark GPT-4 model.

Another good example in the energy sector is one of our portfolio companies at Energy Impact Partners called VIE Technologies. VIE is harnessing a treasure trove of data which is produced by nearly all industrial equipment. This data comes from machine vibration, and it’s largely unintelligible to humans.[4] For an AI model, however, vibration is just another language to interpret… with varying dialects for different types of equipment. Hence, a number of companies have built vibration models to monitor the classes of equipment whose rattling tends to be the easiest to decipher: that means rotating equipment such as pumps, fans, and motors.
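To make the “language” metaphor a little more concrete, here is a toy sketch of why rotating equipment is the easiest dialect to decipher. It is my own illustration, not VIE’s method or any vendor’s: a spinning shaft vibrates at a predictable frequency, so even a crude frequency analysis can flag when some other tone starts to dominate. The function names, the 200 Hz sample rate, the 30 Hz shaft speed, the 60 Hz “fault” tone, and the 1 Hz tolerance are all hypothetical.

```python
import math


def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) carrying the most energy, via a naive DFT.

    O(n^2), which is fine for a toy example; real systems would use an FFT.
    """
    n = len(samples)
    best_freq, best_mag = 0.0, -1.0
    # Skip bin 0 (the constant offset) and scan up to the Nyquist bin.
    for k in range(1, n // 2 + 1):
        re = sum(s * math.cos(2 * math.pi * k * t / n) for t, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(samples))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_freq, best_mag = k * sample_rate_hz / n, mag
    return best_freq


def looks_healthy(samples, sample_rate_hz, shaft_hz, tolerance_hz=1.0):
    """Hypothetical rule: a healthy rotating machine vibrates mostly at its shaft speed."""
    return abs(dominant_frequency(samples, sample_rate_hz) - shaft_hz) <= tolerance_hz


def simulate(components, sample_rate_hz, seconds=1.0):
    """Sum of sine waves; `components` maps frequency (Hz) to amplitude."""
    n = int(sample_rate_hz * seconds)
    return [
        sum(a * math.sin(2 * math.pi * f * t / sample_rate_hz) for f, a in components.items())
        for t in range(n)
    ]


if __name__ == "__main__":
    rate = 200  # samples per second (hypothetical sensor)
    healthy = simulate({30: 1.0}, rate)          # motor spinning at 30 Hz (1,800 rpm)
    faulty = simulate({30: 1.0, 60: 2.0}, rate)  # plus a stronger, hypothetical fault tone
    print(looks_healthy(healthy, rate, shaft_hz=30))  # True
    print(looks_healthy(faulty, rate, shaft_hz=30))   # False
```

The rule here is deliberately crude: a single hand-written threshold. The appeal of an AI model is that it can learn what “normal” sounds like for each individual machine, and pick up far subtler signatures than one dominant tone.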

But as far as I’m aware, only VIE has taken this practice to the next level and developed a model capable of monitoring electrical equipment — specifically electric power transformers. Given bottlenecks in the supply chain for transformers, as well as other crucial electro-mechanical components of the power grid, catching problems in these assets before they cause serious damage or put other assets at risk is mission-critical.

In addition to catching problems before they become too destructive, these types of solutions often lead to substantial labor cost savings. VIE, for example, enables equipment operators to avoid unnecessary “truck rolls” to check up on far-flung assets — or unexpected truck rolls to replace those assets at inopportune times.

Hence, I have a hard time imagining a better set of early applications for Physical AI.

Yet, in order to put a discernible kink in the aggregate labor productivity curve, AI tools that merely help prioritize the deployment of an all-human labor force are just the beginning. We’re also going to need to embed AI in machines capable of interacting directly with the physical world.

Yes, I’m talking about ROBOTS.

So, forget all those vague proclamations that “AI is coming…” Robots are really coming — in fact, millions of them are already here — and it doesn’t require much imagination to foresee their revolutionary impact on society.

The robots are coming

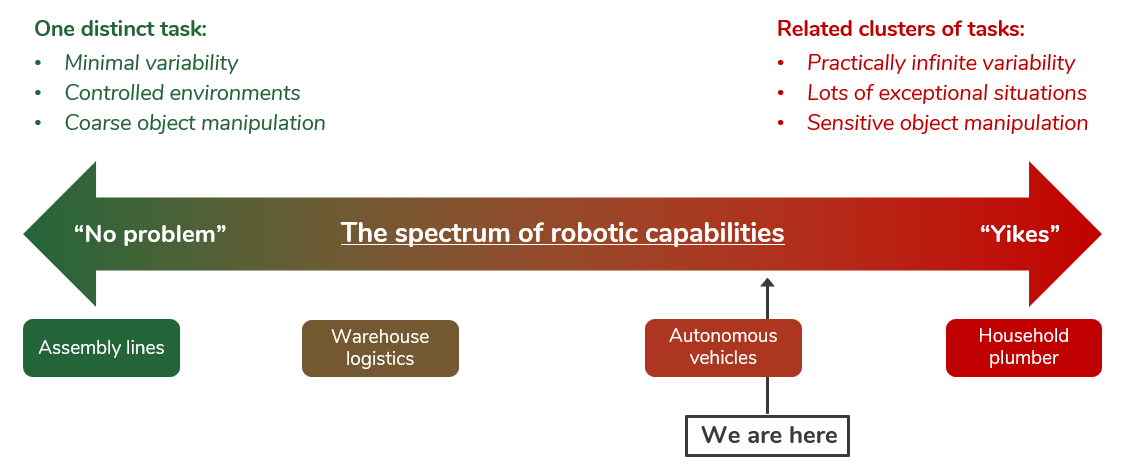

One way to understand the state of robotics is to picture a spectrum. This spectrum spans the full range of physical tasks which a robotic system might be called on to perform.

At one end of the spectrum are the easiest forms of labor to automate: discrete, repetitive tasks with minimal variability and a relatively coarse level of object manipulation. These are tasks which can be fully programmed as a series of concrete steps: Picture a robotic arm in a factory, which spends its days picking up uniform metal parts from the end of a conveyor belt, rotating them, and then stacking them in a pile.

Globally, there are roughly five million robots of this type in service today. They operate mostly in the automotive, electronics, and metal fabrication sectors. Sales have roughly quintupled since 2010, while the average price has fallen by about 50% to less than $20,000 an arm![5] If you’re a tinkerer, and you want to mess around with a robotic arm at home, you can easily find dozens of much cheaper, albeit less robust, versions on Amazon.

Of course, as in many other industries, China is the biggest player in this sector. China has become the largest buyer of industrial robots, by far, and has nurtured a domestic industry which is now challenging even the top suppliers in Europe, Japan, and Korea. (For more on this point, check out “A tale of two energy superpowers”.)

Meanwhile, at the other end of The Spectrum, we find the most difficult forms of human labor to automate. The jobs to be done in this domain typically share a number of common attributes:

- They tend to be clusters of tasks that are nearly impossible to disaggregate — which means that they tend to be performed by a single human worker or a cohesive group of workers.
- They’re extremely variable. No two situations will be exactly alike.
- They may involve interaction with the general population (who are not specifically trained to interact with a robot).
- They may also involve very fine levels of object manipulation — which has long been a notoriously difficult problem for roboticists. See, for example, this headline from GE, from all the way back in 2017…

My mental model for this type of job is a plumber. Consider the variability that a typical plumber encounters on a typical household job site. For starters, he probably meets an upset customer (another messy human) with an ill-defined problem (e.g. “My sink is leaking, and I’m not quite sure where”).[6] Then he enters a messy work environment — perhaps under a sink, or in a basement crawl space — and encounters a messy system, with components installed over the course of multiple decades. He typically needs to diagnose the problem with just a few observations. Then, finally, he needs to get to work on a solution, which probably requires a combination of agility, brute strength, and fine motor skills. For example, the plumber may need to snake his arm into an awkward space — while holding a wrench — in order to gently tighten a valve by just the right amount.

In other words: We are not yet approaching “Plumber” on the Spectrum of Robotic Capabilities.

However, in the past five years, we have made tremendous progress towards this end of the spectrum. The frontier of robotics has been propelled forward, dramatically, by three intersecting trends.

Trend #1: Deployment beyond highly-controlled factory settings

On the robotics spectrum, the next big step past assembly lines has been warehouses. Warehouses are also relatively controlled environments, but much less so than factories. They tend to require greater mobility, and superior agility in order to navigate changing circumstances and unplanned interactions with humans. For some warehouse tasks — e.g. “picking & placing” goods — robots require refined grasping, which takes us back to the established challenge of building dexterous robotic hands. Nevertheless, in the past decade we’ve seen warehouse robots grow from an experiment into a cornerstone of the industry.

Take Amazon, for example. The company embarked on a robotics program beginning in 2012 with the acquisition of a warehouse automation startup, Kiva Systems. Now, Amazon may soon have more discrete robot workers than human employees; and while the number of robots is rising, the number of human employees has begun to decline. (Thanks to ArkInvest for pointing out this data.)

At Energy Impact Partners, we’ve invested in an emerging leader in this sector, RobustAI, which was founded by two robotics veterans focused on how robots can best augment human labor. Their product, “Carter”, appears to be just another humble warehouse cart, but this thing is actually an elegantly designed “cobot” built for collaboration with human workers.

Also, we need to talk about DRONES.

By “drones”, I mean those small, buzzing, electric, multi-rotor vehicles which you’ve probably been annoyed by in a park somewhere. You know the type — they’ve become mainstays of wedding photography. Through the 2010s, this particular robotic form factor became an extremely popular one for experimentation with autonomy, because: A) It flies, B) It’s versatile, and C) It’s cheap — or rather, it has become cheap as Chinese manufacturers have turned their attention to the sector.[7]

At first, these things were flown by remote control, but businesses quickly began to identify use cases which would benefit from some degree of autonomy. And so, drones became a kind of robotics playground. In the energy sector, for example, drones can serve as efficient tools for inspecting big, tall, and remote infrastructure — e.g. wind turbines, solar farms, and transmission towers — with help from machine vision software like GridVision, which was developed by one of our portfolio companies at EIP. Now, drones can even help construct and maintain these types of assets. For example, another portfolio company, Infravision, has developed a drone-based system for installing transmission lines. (Actually, most of the magic is in a ground-based “smart puller tensioner” system…)

Trend #2: Advances in AI… including “Generative” AI

For example: Back in March 2023, Google introduced a landmark model called “PaLM-E”, which integrates robotic sensor data with the more standard GenAI domains of vision and language. Per Google:

“We began with PaLM, a powerful large language model, and “embodied” it (the “E” in PaLM-E), by complementing it with sensor data from the robotic agent. This is the key difference from prior efforts to bring large language models to robotics — rather than relying on only textual input, with PaLM-E we train the language model to directly ingest raw streams of robot sensor data. The resulting model not only enables highly effective robot learning, but is also a state-of-the-art general-purpose visual-language model, while maintaining excellent language-only task capabilities.”

Meanwhile, the foundations of Physical AI have continued to advance independently from LLMs. Last year, for example, Stanford researchers published another landmark paper on new methods for training robotic systems through “imitation learning” from human demonstrations collected via teleoperation. They demonstrated these techniques on a robot they named “Mobile ALOHA”, which was trained to cook shrimp, wipe up spills, open cabinets, and perform other basic household tasks.

Trend #3: A decade of investment in autonomous vehicles (or “AVs”)

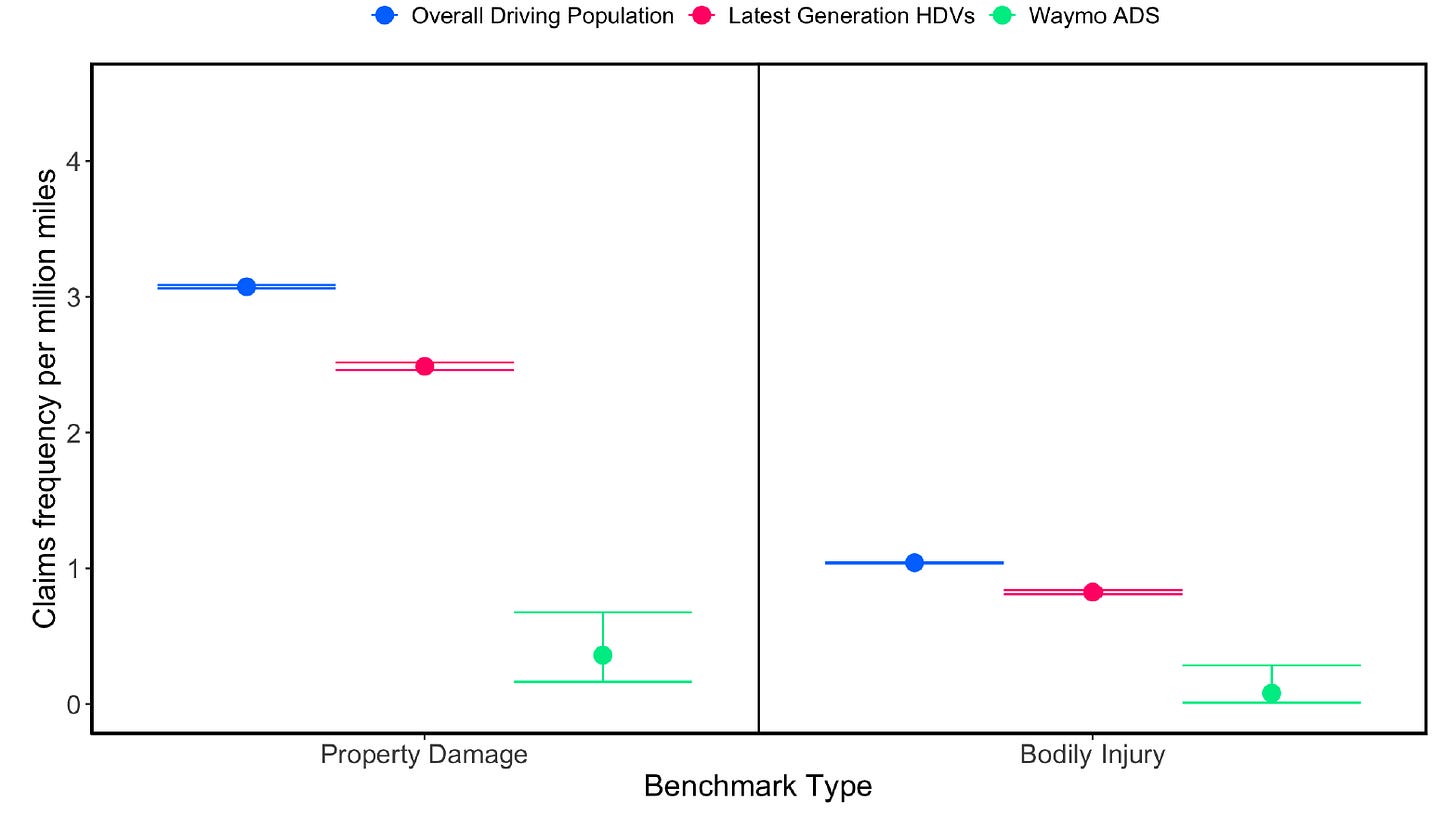

In a recent post, I discussed how autonomous vehicles have finally reached a commercial inflection point — mostly thanks to the pluck and determination of just one company: Waymo (a spinout from Google, which is still majority-owned by Alphabet).

Autonomy is real now

“We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.” - Amara’s law

After more than fifteen years of effort, Waymo’s investment in autonomous vehicles is finally beginning to pay off. The company appears to have reached escape velocity with its autonomous taxi service, closing in on a million autonomous rides a month — which is particularly remarkable given that Waymo just launched the service less than two years ago. Most importantly, the company is now armed with data on over 25 million autonomous miles, and a safety record which conclusively demonstrates the effectiveness of Waymo’s approach. This record should help accelerate Waymo’s expansion into new cities, by alleviating very reasonable public safety concerns.

Hence, on the Spectrum of Robotic Capabilities, Waymo has planted a flag much further to the right than any autonomous system that’s come before. While the range of specific tasks that an AV needs to perform is relatively narrow — “accelerate”, “decelerate”, “turn right”, “turn left” — city streets are some of the most unpredictable environments imaginable. The range of unusual situations a vehicle might encounter is effectively infinite. And of course, the consequences of even small mistakes can be catastrophic.

In order to reach this extraordinary inflection point, Waymo had to invest about $6 billion, while its competitors have invested tens of billions more.[8] It should come as no surprise that such a flood of capital pouring into this field has generated positive ripple effects for adjacent sectors.

I believe that the robotics ecosystem may end up as the biggest beneficiary.

For example: AV development has bequeathed to the world a substantial cohort of “Physical AI” engineers who have now cut their teeth at the leading edge. Unsurprisingly, some of these engineers are now following the great Silicon Valley tradition of setting out to join other companies… or launch new ones. This is a very strong tailwind for the commercial robotics sector.

AV development has also been an important catalyst for investment in LIDAR, a mode of “three-dimensional” sensing with important advantages over purely optical sensors for machine vision. Thanks to the AV ecosystem, the cost of LIDAR sensors has fallen by more than 90% even as their performance has improved.

And LIDAR is not the only common robotics component which has become a lot more affordable in the past decade. Regular Steel For Fuel readers are certainly familiar with the declining cost of lithium-ion batteries, which is important, because electricity tends to be the optimal choice for powering autonomous systems. Meanwhile, the price of GPUs and related microchips, which make up the brains of these systems, has fallen by more than 95%.[9]

And so, the board is set. All of the pieces are now in place for a robotics revolution.

The robots are coming

What will they look like?

There are a number of very well-funded operations pursuing “humanoid” form factors. The thesis behind humanoids is not hard to understand: So much of our world was built by humans, for humans — hence, bipedal robots with two arms, two hands, and opposable thumbs should fit right in. Humanoid robotic models are also naturally well suited for training via “imitation learning” — Tesla is reportedly hiring workers for nearly $50 an hour to wear motion capture suits in order to train the company’s humanoid robots.

Plus, decades of science fiction have conditioned us to look for recognizable features in our creations…

Perhaps humanoids will indeed be necessary if we’re ever going to reach “Plumber” on the Spectrum of Robotic Capabilities. Personally, I’m not so sure. I can see more value being created by a diverse panoply of robots, embodied in a wide range of form factors, each engineered for a distinct set of tasks. This is the course that nature took, after all, as life evolved to fill millions of disparate ecological niches. I see no more reason for a humanoid to be the ideal form factor for installing solar panels than for it to be the ideal form factor for traversing a coral reef.

If I’m correct, then we should all be preparing for a Cambrian explosion of robots entering nearly every corner of the physical economy.

And I, for one, will welcome them.

Here’s to “Physical AI” in all its glorious forms!

[1] …Or a computer speaker.

[2] Sidenote: For an excellent assessment of the current strengths and weaknesses of LLMs as a research tool, I’d recommend the ever-sharp tech analyst Benedict Evans on “The Deep Research Problem”.

[3] This trend in stagnant manufacturing & construction productivity is similar in many other advanced, wealthy nations.

[4] Although a small number of highly specialized technicians are trained to make sense of vibration data…

[5] ArkInvest “Big Ideas 2024”, with data from the International Federation of Robotics.

[6] I’m using “he” to describe this theoretical plumber because the vast majority of plumbers are men.

[7] I’m sorry to say this is once again a Chinese manufacturing story. In fact, a single Chinese company, DJI, is by far the dominant supplier of both consumer and commercial drone hardware, globally.

[8] Waymo’s competitors include Tesla, Amazon (Zoox), Chevy (Cruise)… and a host of failed startups.

[9] GPU = “Graphics Processing Unit”, which is the class of computer chip commonly used to train and run AI models.